JST Workshop

20th March 2011, SUNTEC Convention Centre, Singapore

Location: Room 304, Level 3, Suntec Singapore International Convention & Exhibition Centre

1 Raffles Boulevard, Suntec City,

Singapore 039593

Program

Opening Address (SUNTEC, Room 303 & 304) 9:00 – 9:45

- Prof. Susumu Tachi (Keio Univ.)

- Mr. Atsuya Yamashita (JST)

- Prof. C.C. Hang (NUS)

- Prof. Masa Inakage (Keio Univ.)

Invited Talks (SUNTEC, Room 303 & 304) 9:45 – 11:45

- Prof. Henry Fuchs (UNC) 9:45 – 10:30

- Prof. Thomas DeFanti (UCSD) 11:00 – 11:45

Session 1: Foundation of Technology Supporting the Creation of Digital Media Contents (SUNTEC, Room 303) 13:30 – 15:00

Speakers:

- Prof. Yoichiro Kawaguchi (Univ. of Tokyo)

- Prof. Yasuyuki Yanagida (Meijo Univ.)

- Dr. Maki Sugimoto (Keio Univ.)

- Prof. Masanori Sugimoto (Univ. of Tokyo)

- Prof. Shoichi Hasegawa (Tokyo Inst. of Tech.)

Session 2: Creation of Human-Harmonized Information Technology for Convivial Society (SUNTEC, Room 303) 15:15 – 16:45

Speakers:

- Prof. Susumu Tachi (Keio Univ.)

- Prof. Masatoshi Ishikawa (Univ. of Tokyo)

- Prof. Yasuharu Koike (Tokyo Inst. of Tech.)

- Prof. Shiro Ise (Kyoto Univ.)

- Dr. Atsushi Nakazawa (Osaka Univ.)

Exhibitions 12:45 – 18:00

- Exhibition by Ishikawa Laboratory

- Exhibition by Koike Laboratory

- Exhibition by Tachi Laboratory

- Exhibition by Kawaguchi Laboratory

- Exhibition by Yanagida Laboratory

- Exhibition by Hasegawa Laboratory

- Exhibition by Hoshino Laboratory

18:00 – 21:00

JST Workshop Details

Program:

Opening Address (SUNTEC, Room 303 & 304)

- Prof. Susumu Tachi (Keio Univ.)

- Mr. Atsuya Yamashita (JST)

- Prof. C.C. Hang (NUS)

- Prof. Masa Inakage (Keio Univ.)

Invited Talk (SUNTEC, Room 303 & 304) |

|||

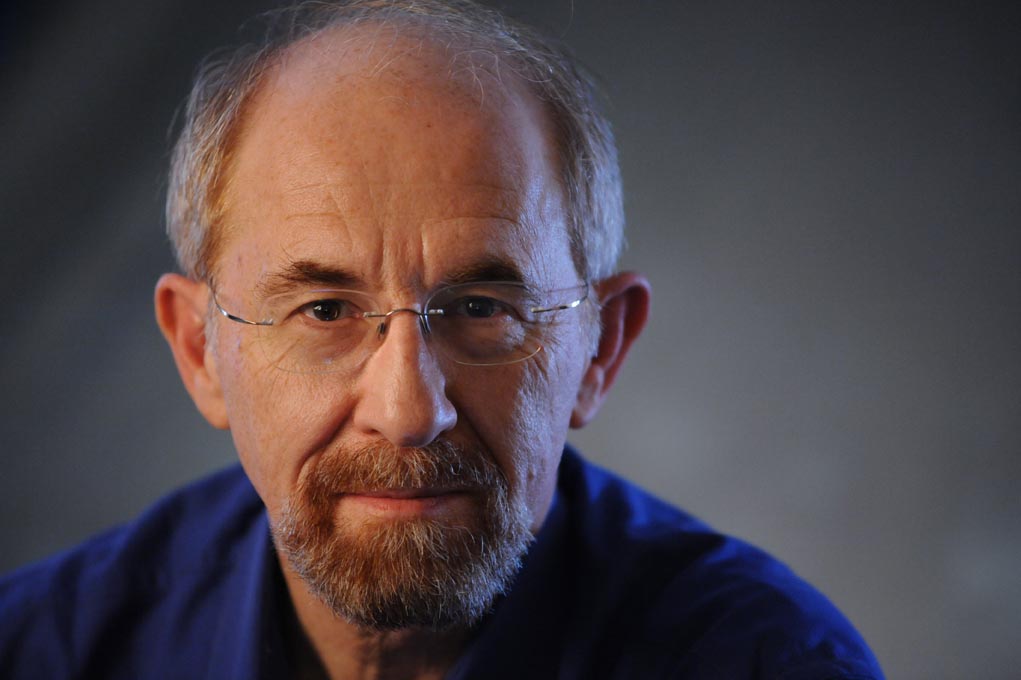

| Prof. Henry Fuchs (University of North Carolina at Chapel Hill) | The Future of Virtual Reality Technologies | ||

|

From its birth in the 1960s, Virtual Reality has been closely dependent on the availability of certain specialized technologies: real-time image generation, real-time tracking, and miniature displays. The lack of these technologies made the initial attempts, beginning in the late 1960s, so difficult that only a few experimental systems were built until the late 1980s, when a combination of pocket TVs (which could be used for head-mounted displays) and real-time graphics workstations enabled an actual field called "virtual reality" to emerge. In the 1990s, the widespread availability of graphics-enhanced PCs and miniature displays fueled the VR boom of that decade. Affordable video projectors in the late 1990s enabled room-sized immersive environments. In the past few years, smartphones with cameras, accelerometers, and other sensors have spawned a boom in augmented reality applications on such devices. The latest promising device, the Microsoft Kinect depth camera, introduced in November 2010, may fuel the next expansion in VR systems and applications. Its real-time depth and color imagery, combined with its affordability and easy interfacing, inspired a following of experimentalists within days of its release. It enables interaction with a local user's body without the user needing to wear (or hold) any encumbrances, and the same imagery enables 3D scene capture for augmented reality and telepresence applications. With this recent history of new technologies reinvigorating the field, VR is in transition: from a situation in which only a few systems are built, with enormous resources and heroic effort, toward one in which the necessary resources are so inexpensive that even individuals can personally fund and develop experimental VR systems; from a situation in which most people can only admire systems from afar, to one in which anyone can readily replicate another's work and build new ideas on top of it.

It is this explosion in the size and vigor of the community that promises to transform VR from a minor media curiosity into a field with major creative, technical, economic, and societal impact.

Prof. Thomas DeFanti (University of California, San Diego): The Future of the CAVE

CAVE, a walk-in virtual reality environment typically consisting of four to six 3m-by-3m sides of a room made of rear-projected screens, was first conceived and built twenty years ago, in 1991. In the two decades since its conception, the supporting technology has improved so that current CAVEs are much brighter, have much higher resolution, and deliver dramatically improved graphics performance. However, rear-projection-based CAVEs typically must be housed in a 10m-by-10m-by-10m room (allowing space behind the screen walls for the projectors), which limits their deployment to large spaces. The CAVE of the future will be made of tessellated panel displays, eliminating the projection distance, but implementing such displays is challenging. Early multi-tile, panel-based virtual reality displays have been designed, prototyped, and built for the King Abdullah University of Science and Technology (KAUST) in Saudi Arabia by researchers at the University of California, San Diego, and the University of Illinois at Chicago. New means of image generation and control are considered key contributions to the future viability of the CAVE as a virtual reality device. (From DeFanti et al., “The Future of the CAVE,” CEJE(1).)

Session 1 Foundation of Technology Supporting the Creation of Digital Media Contents (SUNTEC Room 303) |

|||

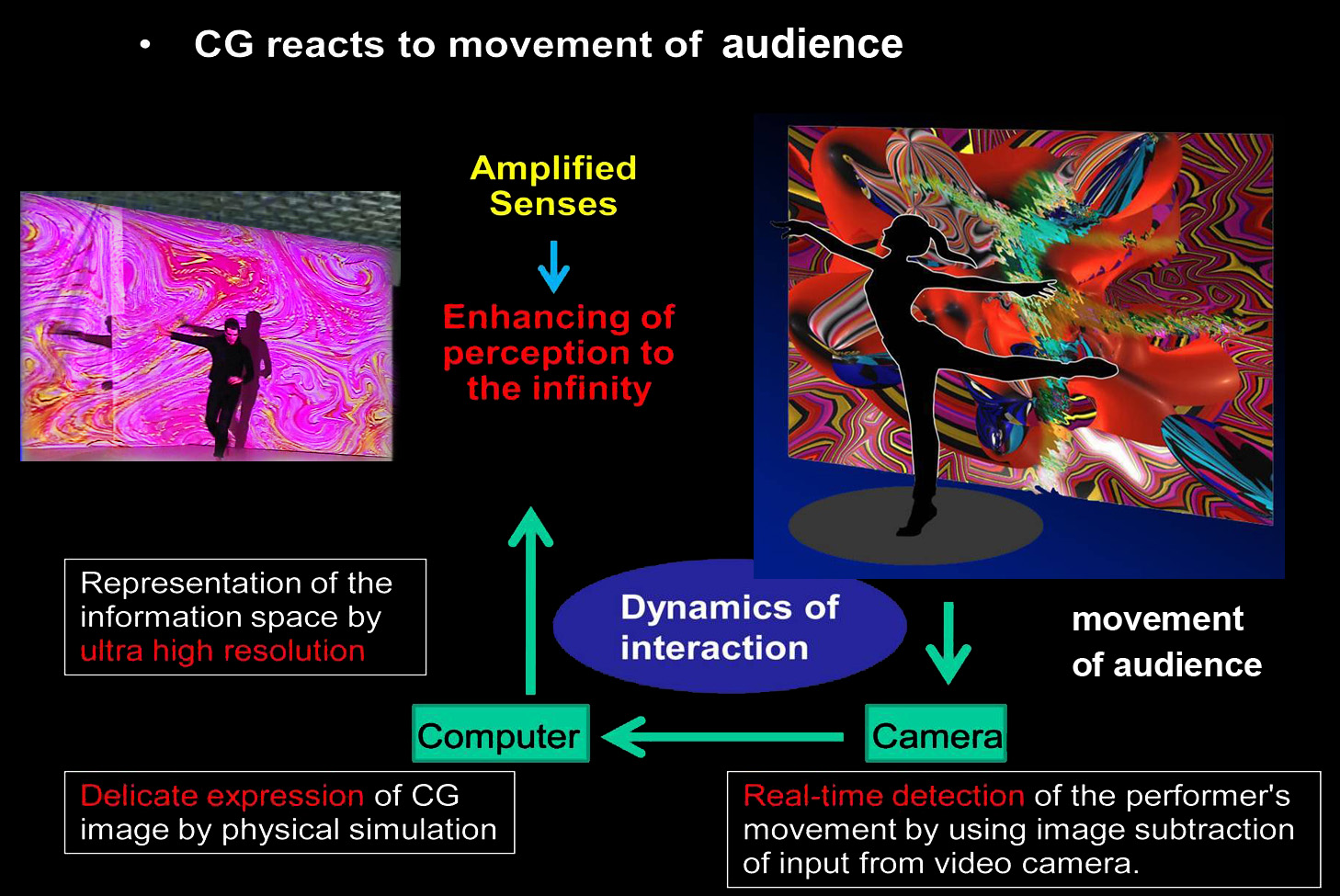

| Prof. Yoichiro Kawaguchi (University of Tokyo) | Creation of Artistic Gemotional Creatures | ||

|

Our aim is to develop biological CG technology that models the beauty of nature, expression technology for high-resolution (Ultra High Definition) imagery, and mechanical modeling technology for objects that react as if they were living things. By organically combining these technologies with the traditional performing arts of Japan, we are developing technology for creating spaces that bring those arts to the cutting edge as "new traditional performing arts," pursuing an "advanced translation of scientific beauty into art" without precedent anywhere in the world.

Prof. Yasuyuki Yanagida (Meijo University): Multi-sensory Interfaces for Interactions between Real and Virtual Worlds

Recently, people have become able to play online games in various places outside the home, thanks to the development of network infrastructures and mobile devices. However, it can be dangerous for mobile users in public spaces if most information is still provided through visual displays. We have examined several ways of providing information through non-visual sensory channels, including tactile and olfactory sensations, and developed prototype systems that deliver stimuli only to the target user. We have also worked on input interfaces, including a first-person pose estimation system for intuitive interaction and an integrated input device that enables seamless switching between pointing and key-input operations.

Dr. Maki Sugimoto (Keio University): Development of Kawaii User Interfaces

Human interfaces are important factors in designing user experiences in virtual environments such as online game worlds. In this project, we propose a fundamental concept, the Kawaii User Interface (KUI), which takes into account the design aspects of “kawaii” (cuteness). The KUI concept contributes to making attractive user interfaces in the field of human-computer interaction. In this talk, we introduce "Stickable Bear" as an example of a KUI. We also explain the theory of a projection-based measurement device that supports the development of applications with KUIs.

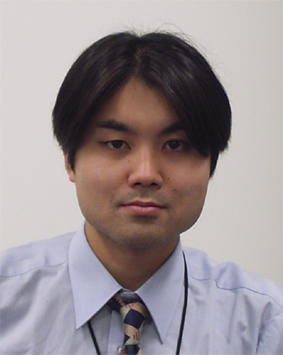

| Prof. Masanori Sugimoto (University of Tokyo) | A Localization Technique for Enhancing Physical Interactions | ||

|

We have been investigating methods for designing sound, healthy online games that keep players from becoming too immersed in the game world by connecting them to the real world. In this talk, we will present a localization system as one of the core technologies for that purpose. First, we will describe an innovative distance measurement technique using ultrasonic signals. In experiments, the proposed technique achieved remarkable performance (a standard deviation of 0.032 mm in 3-meter measurements), which, to the best of our knowledge, is the best performance achieved using narrowband ultrasonic transducers. We will also describe a 3D localization system that is compact and easy to deploy, and discuss gesture recognition and motion capture systems that use the proposed technique.

Prof. Shoichi Hasegawa (Tokyo Institute of Technology): Reactive Virtual Creature with a Selective Attention Model

Interactive applications such as video games require reactive virtual creatures (characters) that generate motions in response to the user’s interaction. We propose generating the reactive motion of a virtual creature by simulating an articulated rigid-body model as its body and a selective attention model as its mind.

Session 2: Creation of Human-Harmonized Information Technology for Convivial Society (SUNTEC, Room 303)

Prof. Susumu Tachi (Keio University): Construction and Utilization of Human-harmonized "Tangible" Information Environment

This project aims to construct an intelligent information environment that is both visible and tangible, in which real-space communication, human-machine interfaces, and media processing are integrated. The goal is to create a human-harmonized "tangible information environment" that allows people to obtain and understand haptic information in real space, to transmit the haptic space thus obtained, and to interact actively with other people through the transmitted haptic space. The tangible environment enables telecommunication, tele-experience, and pseudo-experience with the sensation of working as though in a natural environment. It also enables people to engage in creative activities such as design and creation as though they were in the real environment.

Prof. Masatoshi Ishikawa (University of Tokyo): Dynamic Information Space based on High-speed Sensor Technology

We are attempting to construct a new information space that allows humans to recognize phenomena beyond the limits of the human senses. Crucial to this effort are (1) perfect detection of the underlying dynamics and (2) a new model of sensory-motor integration drawn from work with kHz-rate sensor and display technologies. Within this information space, the sampling rate is matched to the dynamics of the physical world, so humans can deterministically predict attributes of the surrounding, rapidly evolving environment. This leads to a new type of interaction in which the learning rate and capacity of our recognition system are augmented.

Prof. Yasuharu Koike (Tokyo Institute of Technology): Elucidation of perceptual illusion and development of sense-centered human interface

Tele-existence aims to replicate physically plausible information by providing a real sensation of presence. In this project, we aim to elucidate the mechanisms in the brain underlying perceptual illusions of visco-elastic properties. Exploiting such perceptual illusions could realize new human interfaces without elaborate systems.

Prof. Shiro Ise (Kyoto University): Development of a sound field sharing system for creating and exchanging music

The purpose of this study is to build an information communication environment that supports the high-fidelity exchange of music, a universal language. We are developing a sound field sharing system that will help music professionals such as musicians, acoustic engineers, music educators, and music critics to enhance their skills and further explore their creativity by giving them the means to experience 3D sound in a telecommunication environment. This innovative form of music production using communication technology also provides the general public with a platform for a new entertainment experience.

Dr. Atsushi Nakazawa (Osaka University): Equipment and Calibration-Free Eye Gaze Tracking (EGT) using Eye Surface Reflection and High-Framerate Programmable Illumination Projector

Eye gaze tracking (EGT) is a key technology for developing next-generation interactive information environments. However, previous systems require users to wear special hardware such as eye cameras and to perform calibration before use. Because of these requirements, EGT has been used mostly for research in laboratories. My research aims to develop a new eye gaze estimation system that solves these issues and brings EGT into everyday environments. Our key technique is the use of eye-surface reflections of the environmental light map. We developed a new LED array projector that illuminates the environment with a time-coded pattern, while eye cameras capture the images reflected on the eye surfaces. Our EGT is achieved by matching the codes of the environmental illumination with those of the eye-surface reflections.

JST Exhibition Details

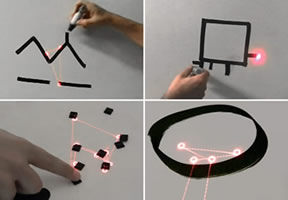

Program:

scoreLight (Ishikawa Laboratory)

"scoreLight" is a prototype musical instrument capable of generating sound in real time from the lines of doodles as well as from the contours of nearby three-dimensional objects (hands, a dancer's silhouette, architectural details, etc.). It uses neither a camera nor a projector: a laser spot explores the shape as a pick-up head would search for sound over the surface of a vinyl record, with the significant difference that the groove is generated by the contours of the drawing itself. Sound is produced and modulated according to the curvature of the lines being followed, their angle with respect to the vertical, and their color and contrast. Sound is also spatialized; panning is controlled by the relative position of the tracking spots, their speed, and their acceleration. "scoreLight" implements gesture-, shape-, and color-to-sound artificial synesthesia; abrupt changes in the direction of the lines trigger discrete sounds (percussion, glitches), thus creating a rhythmic base (the length of a closed path determines the overall tempo).

Weight perception from vision (Koike Laboratory)

It is well known that weight perception is influenced by object size, as in the size-weight illusion. Because weight perception is a subjective sensation, it is hard to recognize absolute weight. This illusion is also caused by other visual cues, such as movement speed and object color. We have been developing a system that induces pseudo-haptic sensations using visual feedback.

RePro3D (Tachi Laboratory)

RePro3D is a full-parallax 3D display system suitable for interactive 3D applications. The approach is based on retro-reflective projection technology, in which several images from a projector array are displayed on a retro-reflective screen. When viewers look at the screen through a half mirror, they see a 3D image superimposed on the real scene without glasses. RePro3D has a sensor function to recognize user input, so it can support interactive features such as manipulation of 3D objects. To display smooth motion parallax, the system uses a high-density array of projection lenses arranged in a matrix on a high-luminance LCD. The array is integrated with an LCD, a half mirror, and a retro-reflector as a screen. An infrared camera senses user input.

Pen de Draw (Tachi Laboratory)

"Pen de Draw" is a 3D modeling system that enables users to create touchable 3D images as though drawing them in mid-air. The ungrounded pen-shaped kinesthetic display "Pen de Touch" is used as the interface. The user can create 3D shapes by drawing figures on a virtual canvas and then touch the created shapes with the device.

Gemotional Art Space (Kawaguchi Laboratory)

Artistic fluid imagery based on computer graphics changes in response to the movement of the audience.

AR character overwriter (Yanagida Laboratory)

AR character overwriter is a system that replaces a real person in front of the user with a game character. The system consists of a stereo camera, a computer, and a head-mounted display, and it uses image-based posture estimation technology. Images captured by the stereo camera are processed by the computer to estimate the postures of people within the field of view. Posture estimation requires neither chroma-key screens nor background image subtraction, so the user can move freely in general environments. In addition, because we do not use active lighting, our method can also be used outdoors.

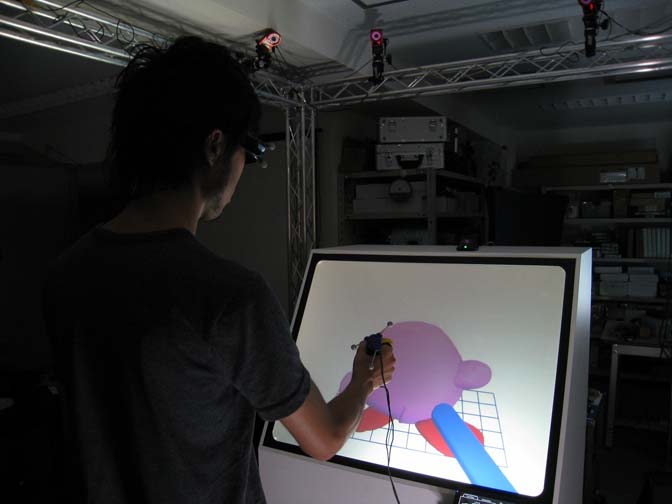

Haptic Interaction with Reactive Virtual Creature (Hasegawa Laboratory)

Interactive applications such as video games require reactive virtual creatures (characters) that generate motions in response to the user’s interaction. We propose generating the reactive motion of a virtual creature by simulating an articulated rigid-body model as its body and a selective attention model as its mind. In this exhibition, visitors interact with a virtual teddy bear through a haptic interface.

Life-like Interactive Character for Games and Storytelling (Hoshino Laboratory)

Building life-like animated characters is important for digital storytelling and games. In this demo, we show architectures for life-like interactive characters that act based on story descriptions.

Stickable Bear (Inami Laboratory)

"Stickable Bear" is a portable robotic user interface. This small robot has degrees of freedom in its head, arms, and feet, and expresses motions as gestures.